The Root Problem: Why Product Teams Struggle with Prioritization

Most product teams don’t fail because they lack ideas or talented engineers. They fail because they say “yes” too often.

The statistics are stark: 66% of products fail within two years, and 33% fail within the first year. While many factors contribute to failure, poor prioritization consistently ranks among the top causes.

The best product organizations aren’t those that build the most features. They’re the ones that master the art of saying “no” to protect focus on what truly matters. Companies like Apple, Amazon, and Stripe have built their success not on feature breadth, but on ruthless prioritization.

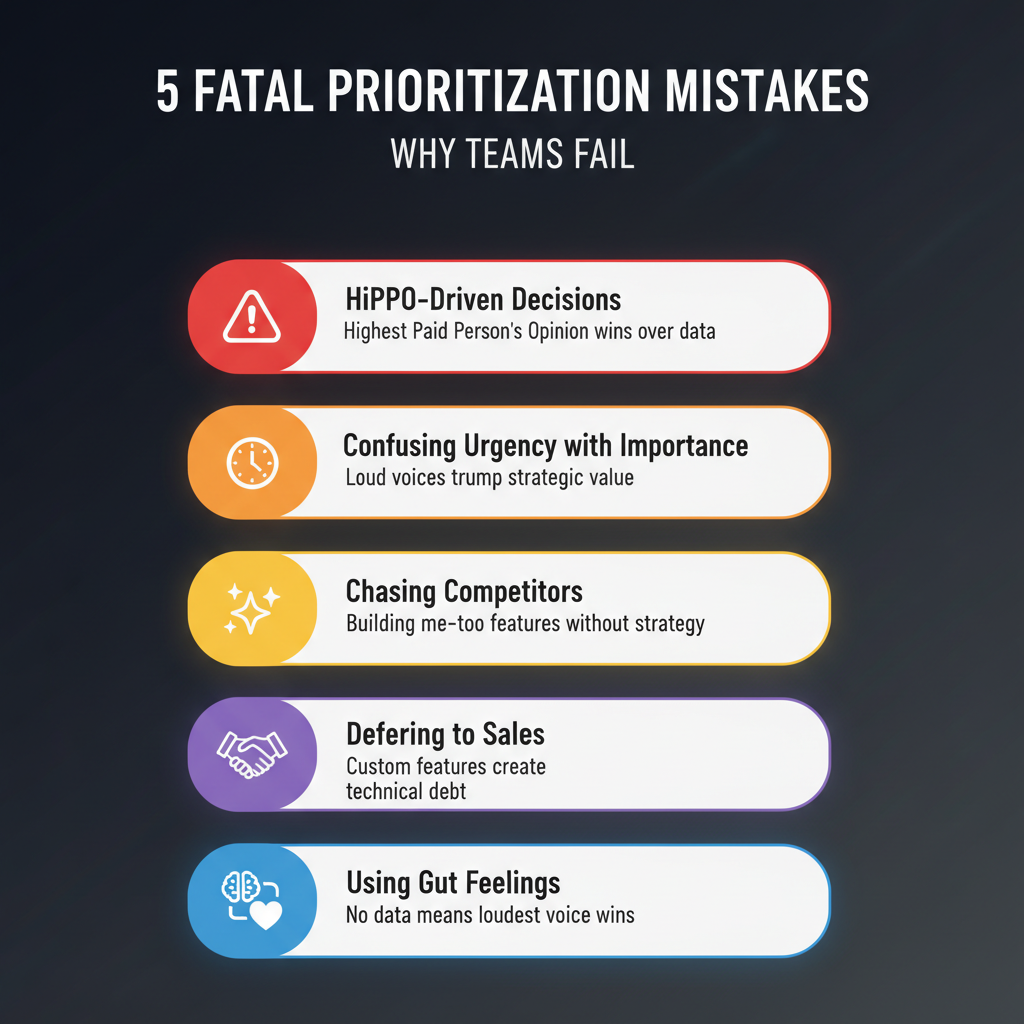

Five Fatal Prioritization Mistakes

1. HiPPO-Driven Decisions

The Highest Paid Person’s Opinion (HiPPO) syndrome kills more good products than bad code ever will. When prioritization becomes about organizational hierarchy rather than data and strategy, teams build features that solve executive pet projects instead of customer problems.

2. Confusing Urgency with Importance

The loudest stakeholder isn’t necessarily representing the most important problem. Yet many teams operate on a “squeaky wheel gets the grease” model, where whoever complains loudest gets their feature built first.

3. Building What Competitors Build

Following competitors feature-for-feature is a race to mediocrity. By the time you’ve copied their feature, they’ve moved on. Worse, you’ve invested precious engineering time building “me-too” functionality that doesn’t differentiate your product.

4. Deferring to Sales

Individual sales deals don’t make for good product strategy. Building custom features for single customers creates technical debt, increases maintenance burden, and often results in features that 95% of your user base will never use.

5. Using Gut Feelings Instead of Frameworks

Without a structured prioritization framework, decisions become political. The person with the most charisma or the loudest voice wins, regardless of data or strategic fit.

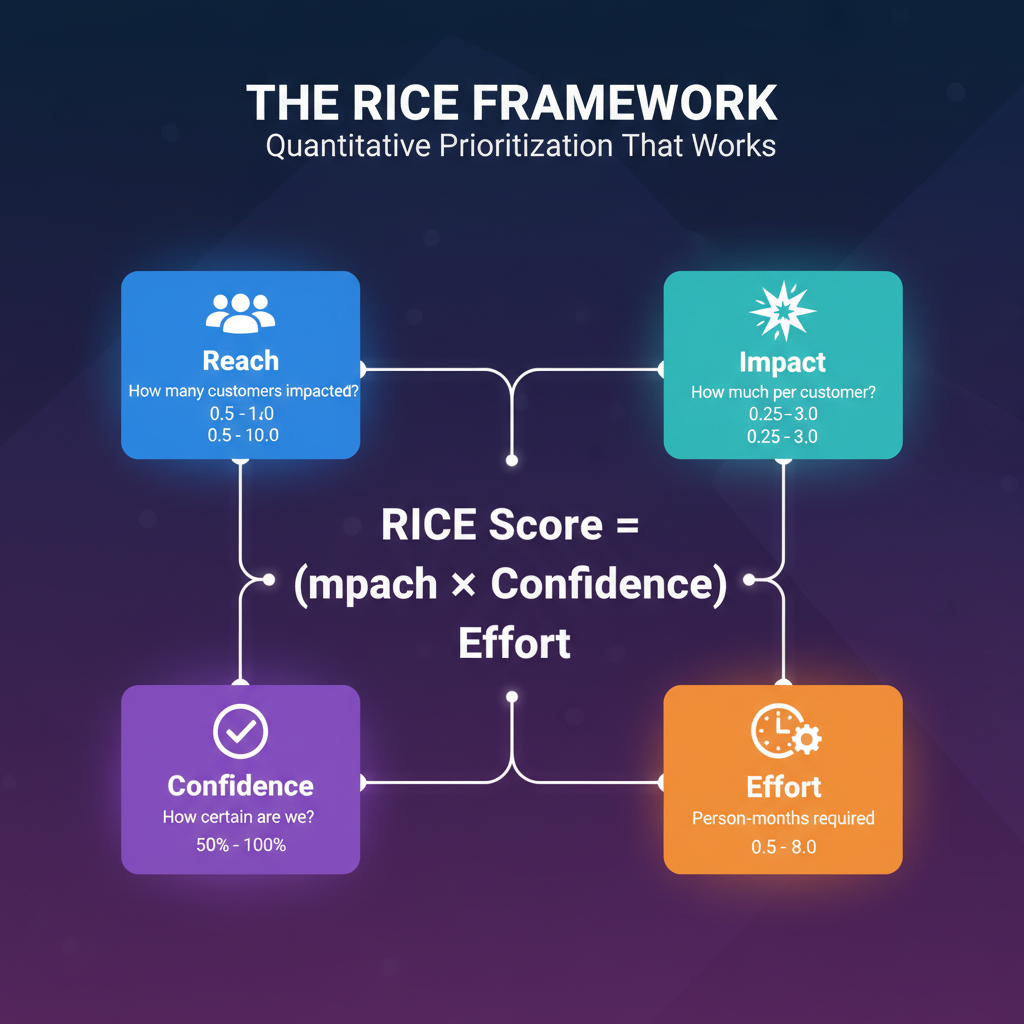

The RICE Framework: A Model That Actually Works

The RICE framework, developed by Intercom, provides a quantitative approach to prioritization that reduces the role of politics and brings more objectivity to the conversation.

RICE stands for:

- Reach: How many customers will this feature impact in a given period?

- Impact: How much will this feature move the needle for each customer?

- Confidence: How certain are we about our Reach and Impact estimates?

- Effort: How much time (in person-months) will this take to build?

The formula is simple: RICE Score = (Reach × Impact × Confidence) / Effort, where Confidence is entered as a decimal (80% becomes 0.8).
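As a minimal sketch, the formula can be written as a small Python function. The function name `rice_score`, the input checks, and the sample inputs are illustrative assumptions, not part of Intercom's definition.

```python
def rice_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    """Return (reach * impact * confidence) / effort.

    confidence is a fraction between 0 and 1 (80% is passed as 0.8).
    effort is in person-months and must be greater than zero.
    """
    if effort <= 0:
        raise ValueError("effort must be greater than zero")
    if not 0 <= confidence <= 1:
        raise ValueError("confidence must be between 0 and 1")
    return (reach * impact * confidence) / effort


# Hypothetical inputs, chosen only to show the arithmetic.
example = rice_score(reach=2.0, impact=1.0, confidence=0.5, effort=2.0)
print(example)  # 0.5
```

Dividing by Effort is what turns the score into a value-per-cost measure: doubling the effort halves the score.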

RICE Scoring in Practice

Let’s look at a realistic example comparing three potential features. Reach is given as a score, with the underlying user estimate in parentheses:

Feature A: Advanced Analytics Dashboard

- Reach: 3.0 (3,000 monthly active users)

- Impact: 2.0 (Major improvement per customer)

- Confidence: 80%

- Effort: 4.0 person-months

- RICE Score: 1.2

Feature B: One-Click Social Sharing

- Reach: 5.0 (10,000+ users will use this)

- Impact: 0.5 (Small but meaningful improvement)

- Confidence: 100%

- Effort: 0.5 person-months

- RICE Score: 5.0

Feature C: Custom Enterprise Integration

- Reach: 0.5 (100 users from one enterprise client)

- Impact: 3.0 (Massive for this one client)

- Confidence: 100%

- Effort: 8.0 person-months

- RICE Score: 0.19

Despite Feature C having “massive impact,” its limited reach and high effort give it the lowest score (0.19), which makes it the lowest priority. Meanwhile, Feature B, seemingly simple, has the highest RICE score (5.0) because it affects many users with minimal effort.
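The comparison above can be reproduced with a short Python sketch. The `Feature` dataclass and its field names are hypothetical helpers introduced for illustration; the numbers are the ones from the three features listed above.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: float
    impact: float
    confidence: float  # fraction between 0 and 1
    effort: float      # person-months

    @property
    def score(self) -> float:
        # RICE Score = (Reach x Impact x Confidence) / Effort
        return (self.reach * self.impact * self.confidence) / self.effort


features = [
    Feature("A: Advanced Analytics Dashboard", 3.0, 2.0, 0.80, 4.0),
    Feature("B: One-Click Social Sharing", 5.0, 0.5, 1.00, 0.5),
    Feature("C: Custom Enterprise Integration", 0.5, 3.0, 1.00, 8.0),
]

# Highest score first.
ranked = sorted(features, key=lambda f: f.score, reverse=True)
for f in ranked:
    print(f"{f.name}: {f.score:.4f}")
```

The ranking comes out as B, then A, then C. Feature C computes to 0.1875, which is the 0.19 shown above after rounding.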

Beyond the Framework: Four Principles for Success

A framework alone isn’t enough. Here are four principles that separate teams that successfully implement RICE from those that struggle:

1. Build a Data-Driven Scoring Culture

Don’t guess at Reach, Impact, or Confidence. Track feature usage, run customer surveys, analyze support tickets, and monitor competitor moves. The more data you have, the more accurate your scores become.

2. Align Every Feature to Your Three-Year Vision

RICE scores tell you how to prioritize. Your product vision tells you what to prioritize. Every feature should connect to your long-term strategy.

3. Review and Re-Score Regularly

Markets shift. Customer needs evolve. Competitor landscapes change. Hold quarterly prioritization workshops to re-score your top 20 features based on new data.
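To see why re-scoring matters, here is a small Python sketch of how one revised estimate changes a score. It starts from Feature A's numbers in the example and assumes, purely hypothetically, that new data lowers Confidence from 80% to 50%.

```python
def rice_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    # RICE Score = (Reach x Impact x Confidence) / Effort
    return (reach * impact * confidence) / effort


# Feature A as originally scored: Reach 3.0, Impact 2.0, Confidence 80%, Effort 4.0.
before = rice_score(3.0, 2.0, 0.80, 4.0)

# Hypothetical re-score: same estimates, but Confidence revised down to 50%.
after = rice_score(3.0, 2.0, 0.50, 4.0)

print(f"before: {before:.2f}, after: {after:.2f}")  # before: 1.20, after: 0.75
```

Because the score is a product, any single input that moves by a given percentage moves the score by the same percentage, so stale estimates can quietly reorder a roadmap.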

4. Communicate Transparently

Share RICE scores with stakeholders. When you say “no” to a feature, explain the tradeoffs. Transform “no” into “not now, and here’s why.”

The Bottom Line

Your roadmap isn’t defined by what you build. It’s defined by what you choose not to build.

RICE won’t make every prioritization decision easy, but it will make them more objective, defensible, and strategic. It shifts conversations from “I think we should build this” to “Here’s the data showing why this scores higher than the alternatives.”

The teams that win aren’t those with the longest feature lists. They’re the ones that ruthlessly protect focus on the features that matter most.